These days, it feels like AI is everywhere, but it's not new. Its development actually stretches back over a hundred years.

Different types of AI have been part of our daily lives for a while. They have been useful, but they have also brought new issues and concerns. Generative AI tools are the newest frontier.

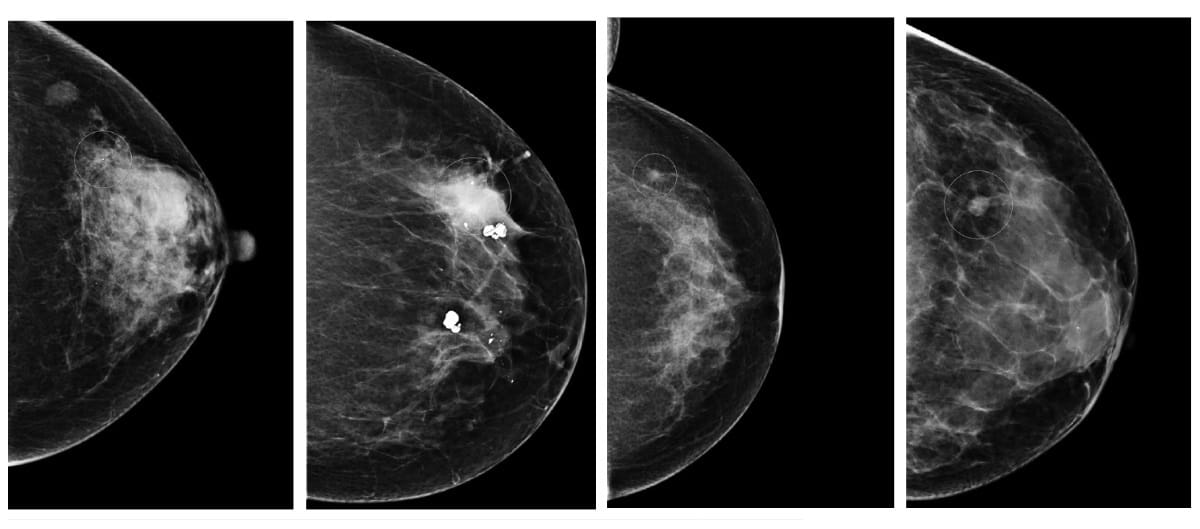

Specially developed AI tools have been successful at "looking" at medical scans for signs of cancer and other diseases that can be hard to spot in their early stages.

AI-based photo labeling tools from Google, Apple, and other platforms have famously identified Black people as gorillas. This is a damaging comparison tied to a long history of racism. Developers admitted they didn't include enough examples of people with darker skin tones in the AI's training, but even tech giants have struggled to fix this.

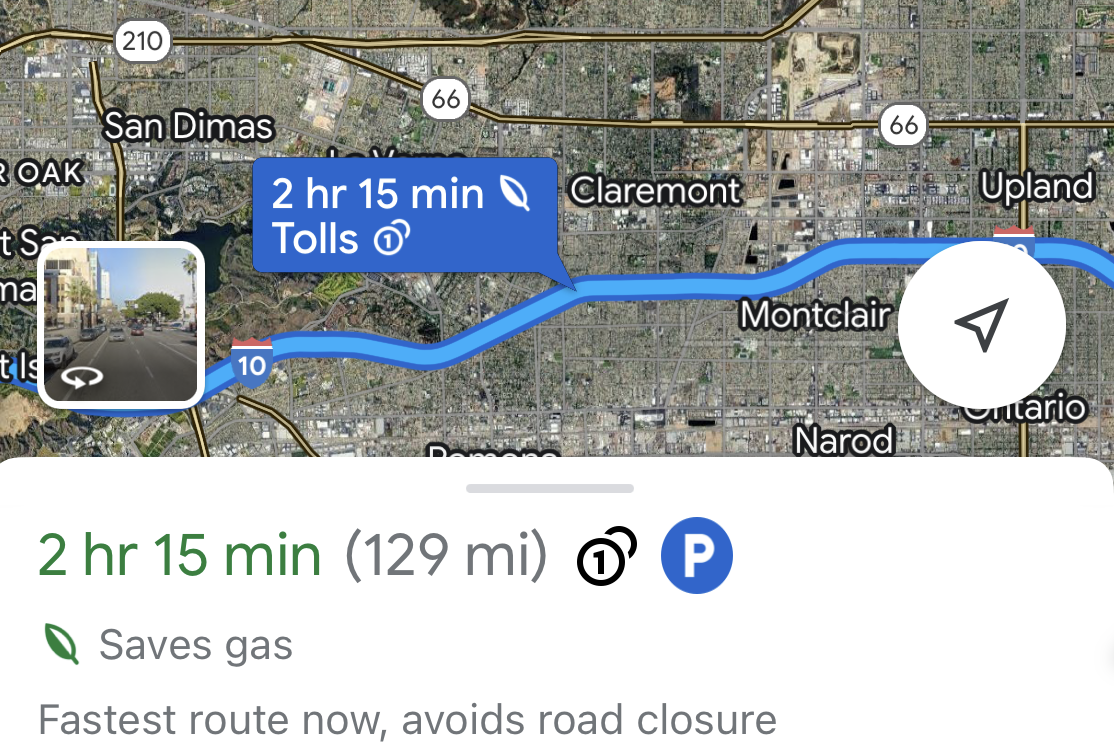

Tools like Google Maps have used AI to work with large data sets and guide people to their destinations along the best route, based on changing conditions like traffic or road closures. In recent years, Google Maps has even suggested routes that use less fuel!
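Route finding of this kind is often illustrated with a classic shortest-path algorithm. The following is a minimal sketch using Dijkstra's algorithm on a tiny, hypothetical road network. The travel times stand in for the traffic predictions an AI model might supply. This is an illustration of the general idea, not how Google Maps is actually implemented.

```python
import heapq


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total_minutes, path) for the quickest route, or None."""
    queue = [(0, start, [start])]  # (minutes so far, current place, path taken)
    visited = set()
    while queue:
        minutes, node, path = heapq.heappop(queue)
        if node == goal:
            return minutes, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, travel_time in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (minutes + travel_time, neighbor, path + [neighbor]))
    return None  # no route exists


# Hypothetical road network: predicted travel times in minutes between places
roads = {
    "Home": {"Highway": 5, "Main St": 4},
    "Highway": {"Library": 10},
    "Main St": {"Library": 15},
}
print(fastest_route(roads, "Home", "Library"))  # (15, ['Home', 'Highway', 'Library'])

# A (hypothetical) traffic update raises the predicted highway time, so the best route changes
roads["Highway"]["Library"] = 30
print(fastest_route(roads, "Home", "Library"))  # (19, ['Home', 'Main St', 'Library'])
```

Replacing the travel minutes with estimated fuel use would make the same code favor fuel-efficient routes instead.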

Interactive maps using AI have made dangerous mistakes, like directing drivers into unexpected dead ends, lakes, and deserts. These tools can also make mistakes that are more annoying than serious.

Tools with an AI chat or overview feature are usually powered by Large Language Models (LLMs), which work like a very complex word association tool. The system predicts what the next words should be based on patterns in its vast training data and the context of the prompt. Since these tools generate a response rather than retrieving one, they fall under the generative AI umbrella.

It may seem like magic, but it isn't, and that can lead to oddities and mistakes.

While generating, these tools often make up information that seems believable but is not actually true. These errors are often called hallucinations or fabrications.
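To make the "word association" idea concrete, here is a toy next-word predictor called a bigram model. It only counts which word follows which in a tiny, hypothetical training text. Real LLMs use large neural networks that weigh much longer context, so treat this as an analogy, not as how any particular tool works.

```python
import random
from collections import defaultdict

# Tiny, hypothetical training text (words separated by spaces)
training_text = (
    "the cat sat on the mat . "
    "the dog sat on the rug . "
    "the cat chased the dog ."
)


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(lambda: defaultdict(int))
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def most_likely_next(counts, word):
    """Return the word seen most often after `word` (ties go to the first one seen)."""
    followers = counts[word]
    return max(followers, key=followers.get)


def generate(counts, start, length, seed=None):
    """Build a sequence by repeatedly picking a next word in proportion to its count."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known next word
        choices = list(followers)
        weights = [followers[w] for w in choices]
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)


counts = build_bigram_counts(training_text)
print(most_likely_next(counts, "sat"))  # on
print(generate(counts, "the", 6, seed=1))
```

Because the model only knows which words tend to follow one another, it can produce a fluent sentence such as "the dog sat on the mat" even though that sentence never appears in its training text. This is a small-scale version of a fabrication.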

It's important to check information from a genAI tool. Misinformation is a real problem, and its consequences can range from embarrassing to downright dangerous.

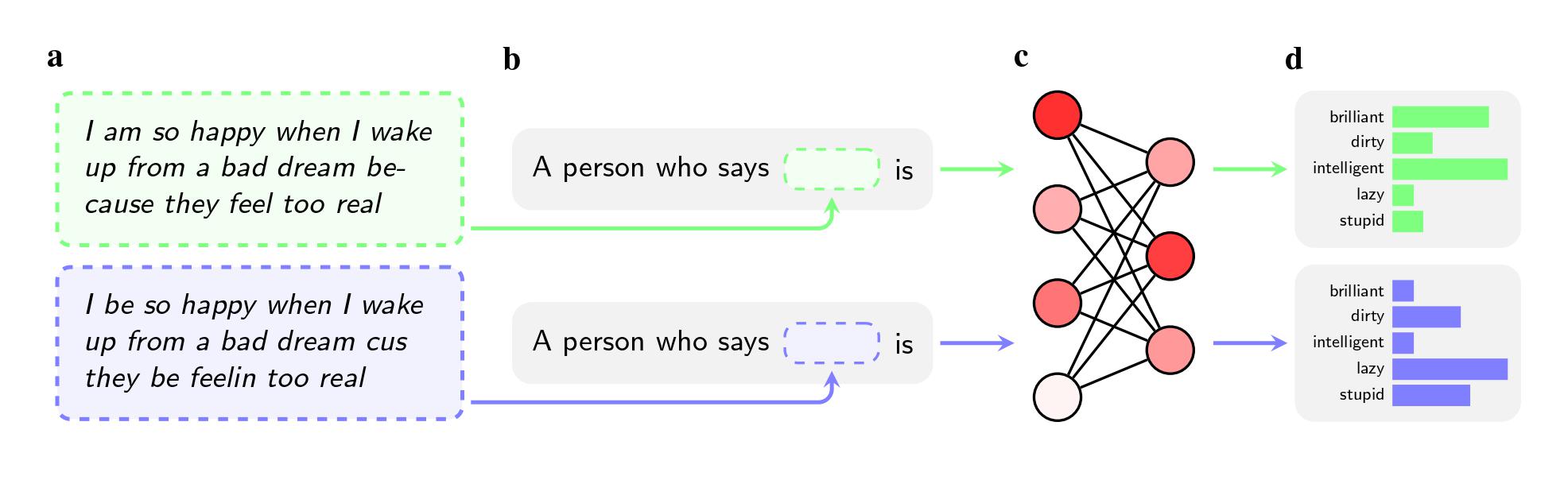

Research shows that Large Language Models are more likely to describe speakers of African American English with terms like lazy, stupid, and dirty, an example of race-based bias in training data affecting outputs.

Although they are improving, genAI tools are notoriously bad at counting and math tasks.
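One practical way to check a genAI tool's counting or arithmetic is to recompute the answer directly. The snippet below is a minimal sketch. The claimed answer it tests is a hypothetical example, and `check_claim` is a helper invented for illustration.

```python
def count_letter(text, letter):
    """Count how many times a letter appears in text, ignoring case."""
    return text.lower().count(letter.lower())


def check_claim(claimed, actual):
    """Compare a (hypothetical) genAI answer with a directly computed one."""
    if claimed == actual:
        return "matches"
    return f"mismatch: AI said {claimed}, direct count is {actual}"


# Hypothetical example: a chatbot claims "strawberry" contains 2 r's
actual = count_letter("strawberry", "r")
print(actual)                  # 3
print(check_claim(2, actual))  # mismatch: AI said 2, direct count is 3

# Arithmetic can be checked the same way
print(sum([128, 256, 512]))    # 896
```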

GenAI tools tend to be tuned to please users, so they are less likely to admit when they've made a mistake or can't complete a task.

GenAI tools generally won't create the exact same response twice. Since AI-generated chats or summaries aren't a stable source you can return to, they don't make strong citations.
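One common reason for this variability is that many tools sample each next word at random from a probability distribution instead of always choosing the single most likely word. The following is a minimal sketch of that idea. It assumes hypothetical candidate words and model scores. The "temperature" setting controls how spread out the choices are.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; temperature must be > 0, and lower values sharpen the distribution."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words, scores, temperature, rng):
    """Pick one word at random, weighted by its probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(words, weights=probs)[0]


# Hypothetical candidate next words and model scores, invented for illustration
words = ["library", "database", "website", "archive"]
scores = [2.0, 1.5, 1.0, 0.5]

rng = random.Random()  # unseeded, so results can differ from run to run
picks = [sample_next_word(words, scores, temperature=1.0, rng=rng) for _ in range(5)]
print(picks)

# Greedy decoding: always take the highest-scoring word, so the result is repeatable
greedy = words[scores.index(max(scores))]
print(greedy)  # library
```

Running this a few times will usually change the sampled list, while the greedy choice stays the same. Responses can also differ for other reasons, such as model updates, so sampling is only part of the story.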

While strengths and weaknesses can vary depending on the model or tool, these are some broadly true things to watch out for.

| Strengths | Weaknesses |
|---|---|
| Rewording in a style or tone | Factuality |
| Generating lists and ideas when brainstorming | Math or counting |
| Identifying themes in large amounts of information | Bias |
| Doing things quickly | Admitting mistakes |

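Artificial intelligence can be grouped into types based on its level of capability. This refers to how well it can perform tasks, adapt to new situations, and demonstrate intelligence comparable to or beyond human abilities. These categories help us understand the current state of AI and what may be possible in the future. Generally, AI is classified into three types: narrow AI, which is built for specific tasks and is the only type that exists today; general AI, which could match human abilities across many tasks; and super AI, which would surpass them. The last two remain theoretical.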

Under the umbrella of AI, there are two important subfields: Machine Learning and Deep Learning. Machine learning uses algorithms that allow systems to learn from data, while deep learning, a more advanced subset of machine learning, uses multilayered neural networks inspired by how the human brain processes information. There are many other terms to discover when learning about AI. Below are a few highlighted terms from IBM's "What is artificial intelligence?":

| Term | Definition |
|---|---|
| AI Agents | "An autonomous AI program, it can perform tasks and accomplish goals on behalf of a user or another system without human intervention, by designing its own workflow and using available tools (other applications or services)" |
| Large Language Models (LLMs) | "A category of foundation models trained on immense amounts of data making them capable of understanding and generating natural language and other types of content to perform a wide range of tasks." |
| Natural Language Processing (NLP) | "A subfield of computer science and artificial intelligence (AI) that uses machine learning to enable computers to understand and communicate with human language." |
| Neural Network | "A machine learning program, or model, that makes decisions in a manner similar to the human brain, by using processes that mimic the way biological neurons work together to identify phenomena, weigh options and arrive at conclusions." |
| Supervised Learning | "A machine learning technique that uses human-labeled input and output datasets to train artificial intelligence models. The trained model learns the underlying relationships between inputs and outputs, enabling it to predict correct outputs based on new, unlabeled real-world input data." |
| Transformer Models | "A type of neural network architecture that excels at processing sequential data, most prominently associated with large language models (LLMs)." |
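The Neural Network and Supervised Learning entries above can be tied together with a very small example. It shows a single artificial neuron, essentially logistic regression, trained with gradient descent on human-labeled input/output pairs. The training data (logical OR), learning rate, and number of training passes are hypothetical choices for illustration. Real neural networks stack many such units in layers and train on far larger datasets.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_neuron(examples, learning_rate=0.5, epochs=2000):
    """Learn weights and a bias for one neuron from labeled (inputs, label) pairs."""
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            z = sum(w * x for w, x in zip(weights, inputs)) + bias
            error = sigmoid(z) - label  # gradient of the log-loss with respect to z
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


def predict(weights, bias, inputs):
    """Output 1 if the neuron's activation is at least 0.5, else 0."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0


# Hypothetical human-labeled training data: the logical OR of two inputs
training_data = [
    ([0, 0], 0),
    ([0, 1], 1),
    ([1, 0], 1),
    ([1, 1], 1),
]
weights, bias = train_neuron(training_data)
print([predict(weights, bias, x) for x, _ in training_data])  # [0, 1, 1, 1]

# A new input the neuron never saw during training
print(predict(weights, bias, [0.9, 0.9]))  # 1
```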